Related Works

Experience replay buffer and the approximation of the real action(or state) function share a common idea: the sequence of observed data encountered by an online RL is no-stationary, and on-line RL updates are strongly correlated. Aggregating over memory in this way reduces non-stationarity and decorrelates updates, but at the same time limits the methods to off-policy algorithms.

Experience replay has several drawbacks:

- it uses more memory and computation per real interaction;

- it requires off-policy learning algorithms that can update from data generated by an old policy.

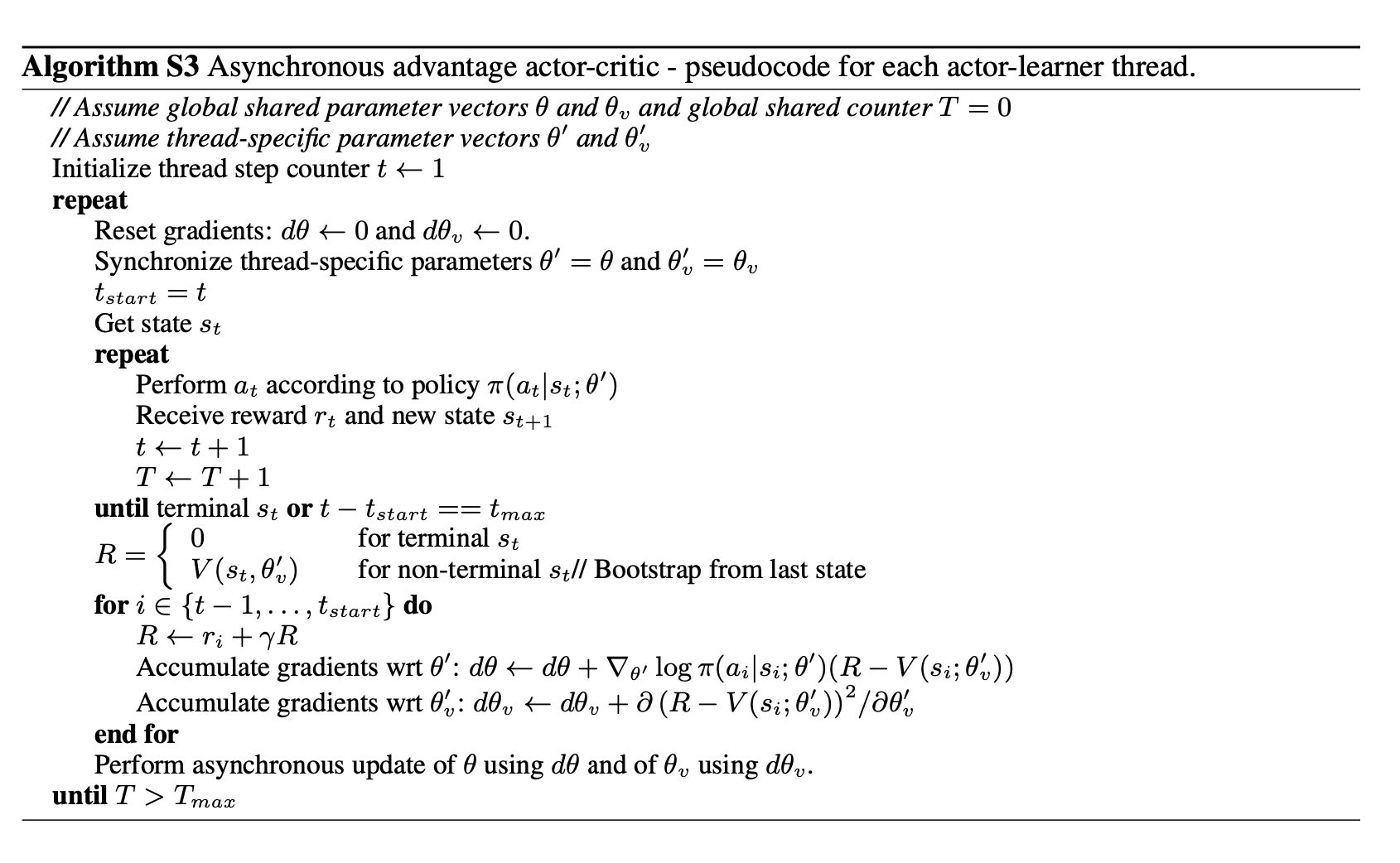

In this paper, we asynchronously execute multiple agents in parallel instead of experience replay. This parallelism also decorrelates the agents’ data into more stationary process. So A3C is a on-policy algorithm.

Methods

- The policy and the value function are updated after every actions or when a terminal state is reached.

- While the parameters of the policy and of the value function are shown as being separate for generality, we always share some of the parameters in practice. We typically use a convolutional neural network that has one softmax output for the policy and one linear output for the value function, with all non-output layers shared.

- Adding the entropy of the policy to the objective function improved exploration by discouraging premature convergence to suboptimal deterministic policies.